Mike Nimród: Exploring the field of privacy-engineering (IJ, 2021/2. (77.), pp. 33-39.)

1. Introduction

Privacy by Design (PbD) has been defined in many ways by academia. It has been seen as a design philosophy for improving the overall privacy friendliness of IT systems,[1] a competitive business advantage,[2] a set of technical solutions for privacy engineering and, ultimately, a legal obligation.[3] We observe a shift in the regulatory approach towards PbD: regulators first proposed these principles as non-mandatory guidelines and later adopted them as express legal obligations. The high-level principles were proposed for computer systems in general, but they did not provide enough detail for software engineers to adopt them when designing and developing applications.[4] This lack of concrete guidance on the 'how' of the PbD principles has been a constant theme in the discussion. The PbD principles are meant to be technology-neutral; their primary focus is therefore on the 'what', leaving the 'how' to the development community. Part of the problem stems from the fact that technicians and designers are typically not fluent in security and privacy.[5] As Shapiro put it:

"They may sincerely want security and privacy, but they seldom know how to specify what they seek. Specifying functionality, on the other hand, is a little more straightforward, and thus the system that previously could make only regular coffee in addition to doing word processing will now make espresso too. (Whether this functionality actually meets user needs is another matter.)"[6]

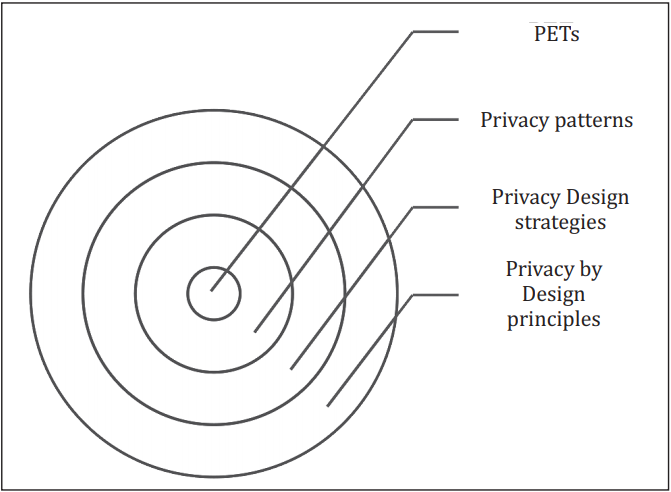

The PbD philosophy, as researchers have noted, suffers from a lack of guidelines on how to map legal data protection requirements into system requirements and components.[7] In response, privacy design strategies have been defined.[8] These strategies are often implemented through privacy patterns, which in turn rely on the implementation of privacy-enhancing technologies (PETs). Lenhard et al. have recently summarized the existing literature on privacy patterns,[9] whereas Senicar et al. have studied PETs extensively.[10] The following sections provide an overview of these principles, strategies, patterns and PETs, with the aim of presenting an overall guide to the granularity of PbD. This layered approach is shown in Figure 1.

Figure 1. Layers of PbD.

2. Concept and Origins of PbD

Technology, and its rapid advancement in particular, has increasingly received attention from the field of ethics, which has evolved from a focus on theory to a focus on sensitivity to the values "built into" technology and on the process of building them in.[11] This is how the concept of Value Sensitive Design (VSD) was born; Friedman et al. defined it as a theoretically grounded approach to the design of technology that accounts for human values in a principled and comprehensive manner throughout the design process.[12] Klitou affirms that VSD emphasizes the social and ethical responsibility of scientists, inventors, engineers or designers when researching, inventing, engineering and/or designing technologies that have, or could have, a profound effect (negative or positive) on society, thereby creating what is known as normative technology.[13] PbD is essentially both an extension and an application of VSD. Its aim is to develop systems, products and services that are inherently privacy-friendly and non-intrusive, giving users extended control over their personal data and transparency about how these data are processed by such systems, products and services. Hildebrandt and Koops see PbD as "ambient law", in which legal norms are articulated within the infrastructure itself, marking a transition from simple legal protection to legal protection by design.[14]

Guarda and Zannone also characterized PbD as an approach to bridging the difficult gap between legal (natural) language and computer (machine) language in order to develop "privacy-aware systems".[15] One of the goals of PbD, therefore, could be to create devices or systems capable of effectively implementing laws and rules that humans understand in the form of legal natural language (LNL) and that devices, systems and computers understand in the form of legal machine language (LML).[16] Kenny and Borking termed PbD privacy engineering, describing it as a systematic effort to embed privacy-relevant legal primitives into technical and governance design.[17]

Across all the approaches the research community has produced, one common theme can be identified: PbD is driven by technical solutions rather than organizational approaches. Whereas informational privacy in general is user-centered and often policy-driven, the same cannot be said of PbD, which is more developer-centered and driven by coding. In any case, PbD is not meant to be the archenemy of innovation, and it should not be treated as a barrier to technological development. In fact, history shows that neither PbD nor legislation on technology can fulfill this role. In reality, PbD aims to be a prudent driver of technological development.[18]

3. Delimitations: Lex informatica

In 1997, Mefford argued that Lex informatica would meet the legal needs of netizens,[19] much as the lex mercatoria evolved to meet the needs of merchants who found national laws incapable of dealing with the reality of merchant transactions.[20] With the information age, society has undoubtedly arrived at Lex informatica, where a prominent question concerns the regulatory role of technology itself. The reader should also note a reversal here: the internet and ICT in general were subject to heavy regulation from their early appearance, whereas today parts of society argue that technology itself should play a bigger role in regulation and regulatory decision-making. Proponents of this philosophical stance have repeatedly asserted that "code is law". The American theorist Lessig explained this by arguing that code is ultimately the architecture of the Internet and, as such, is capable of constraining an individual's actions through technological means.[21] Nuth has compared classic legal regulation with Lex informatica and identified clear distinctions between the two regimes. The comparison is shown in Table 1.

| | Legal regulation | Lex informatica |
| --- | --- | --- |
| Framework | Law | Architecture standards |
| Jurisdiction | Physical territory | Network |
| Content | Statutory/court expression | Technical capabilities |
| Source | State | Technologists |
| Customization | Contract (negotiation) | Configuration (choice) |
| Enforcement | Court | Automated, self-execution |
